In our last blog post of the 2022 #SummerofAI series, we discuss the importance of generative AI and synthetic data generation, fueled by the enormous advancements in AI research.

In our last two blog posts, we discussed The Rise of Intelligent Edge Devices with AI Acceleration and the democratization of AI tools and the potential of AI developers. In this article, the last one in the 2022 #SummerofAI series, we will discuss the importance of generative AI and synthetic data generation, fueled by the enormous advancements in AI research. Generative AI refers to AI models that learn the underlying structures and representations of their training data in order to generate completely new and original output. This opens the door to many applications where rare data is needed and has big potential for a wide variety of use cases, as we mentioned in our Top 10 list for 2022.

Generative AI on the Rise

Since 2021 and even more so in 2022, AI creations have resulted in some impressive end products, including human-like texts, poems, images, music, graphical art, and more. These AI models are mainly enabled by so-called transformer models like Microsoft’s Turing or GPT-3 that store billions of parameters that can only be handled in the cloud. Data generation is not only useful for artistic creations, but market researchers are also seeing transformational potential for many other industries. As Gartner states, “Exploration of generative AI methods is growing and proving itself in a wide range of industries, including life sciences, healthcare, manufacturing, material science, media, entertainment, automotive, aerospace, defense, and energy.”

The growth of AI doesn’t stop there. As we saw in our previous post, AI-augmented development with GitHub’s Copilot provides an AI pair programmer that generates source code and suggests completions based on the surrounding code context and the comments written by the human author.

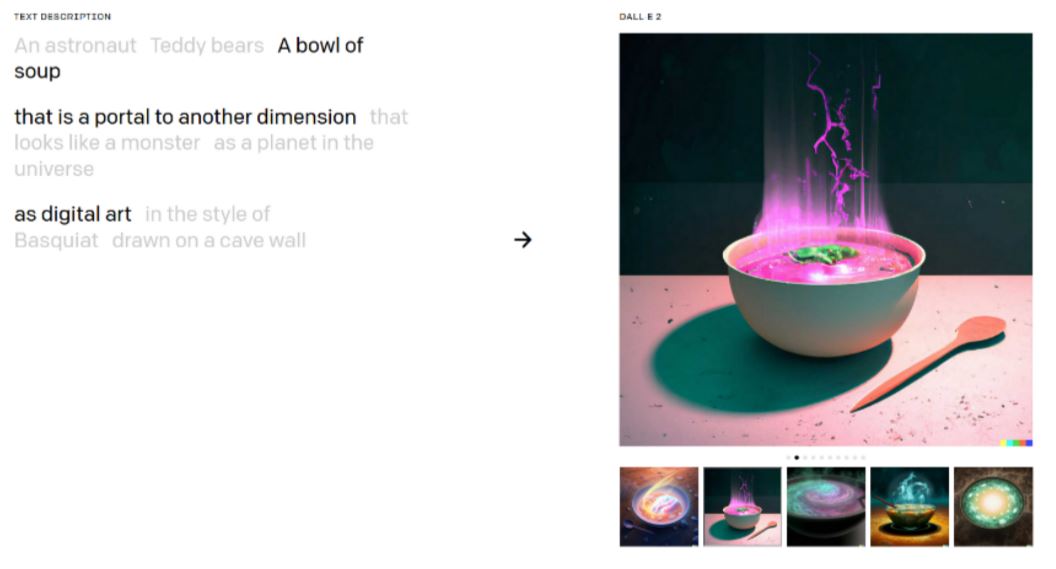

The DALL·E 2 Moment

GitHub's Copilot is derived from the OpenAI Codex AI system, which was trained on billions of lines of public source code. Codex itself is derived from the famous transformer model GPT-3. OpenAI also used GPT-3 as the basis for a new AI system called DALL·E 2 that can create realistic images and art from natural language descriptions. Since the private launch of DALL·E 2 in April 2022, many impressive results have been published. Some of them can even be tried out on the official website with a semi-interactive prompt, as shown below.

Natural language text prompt as input and DALL·E 2 artistic output.

DALL·E and DALL·E 2 were pioneers for human-like quality with transformer-based models that take simple text input and synthesize new visual content out of it. Since the success of DALL·E 2, other big tech firms and researchers have been working on similar text-to-image models. For example, Imagen provides an unprecedented degree of photorealism and Make-A-Scene uses text input and coarse sketches to generate photorealistic images.

All of these amazing systems have two things in common: they require huge amounts of training data and computing power that only the cloud can provide, and access is limited to invite-only beta deployments.

Fortunately, other teams have taken up generative AI and created smaller versions of similar text-to-image models. Midjourney is one example where a limited trial is offered via a Discord chat bot, which produced some interesting results for me. On August 16, 2022, as shown in Figure 4, I requested visuals for “An IT consultant in front of a computer being excited about AI synthetic data shown on the computer screen.”

Natural language text prompt as input and Midjourney's artistic interpretations delivered as Discord chat bot.

Another online AI tool that can draw images from a text prompt is Craiyon (formerly known as DALL·E mini). Craiyon is based on a smaller and older version of DALL·E, and therefore the results lag well behind the latest state-of-the-art versions, as can be seen below when I requested “An IT consultant in front of a computer being excited.” The full prompt originally used with Midjourney only resulted in artifacts that were not close to the topic at all.

Natural language text prompt as input and Craiyon's interpretations.

The latest addition to the AI stack is the Stable Diffusion image generation model implemented in DreamStudio by Stability.ai. “It understands the relationships between words to create high quality images in seconds […] The model is best at one or two concepts as the first of its kind, particularly artistic or product photos.”

Stable Diffusion's interpretations of an excited IT consultant.

The Controversy

While these new generative AI technologies are incredible advancements, such powerful tools can also be abused. Unethical actions are already occurring with Deep Fake images, videos or fraudulent voice synthesis. In fact, even the FBI issued a warning to companies saying that imposters use real-time Deep Fakes for remote job applications. Therefore, it is important to talk about Digital Ethics and Responsible AI and ensure that such services don’t get into the wrong hands. This might be another reason why most big tech companies that developed text-to-image generative AI are not providing public access to it. Fortunately, AI text-to-image and text-to-video systems are mainly used to generate positive end products like customized talking head clips purely from a text prompt as can be seen in the video below.

Artists are also eagerly following the developments of DALL·E 2 and its siblings and while some see these new generative AI transformers as another tool they can leverage for their artwork, others are concerned and think the technology might copy their style and make human artists obsolete. It is surely a controversial topic but not much different than other automation waves that have happened in the past where technology could suddenly perform things that only a human was capable of before. When photography was invented, for example, some painters were saying it was cheating. However, art is ultimately about finding a niche with a unique idea and having the right skills and tools. Now, AI is simply another tool in the toolbelt for artists and should not be seen as a competitor or copycat.

Another controversy in AI is the issue of bias, which we also discussed in our Digital Ethics and Responsible AI post. Bias can lead to significant discrimination issues in AI production systems that use a non-inclusive training data set. A solution could be generative AI and synthetic data with photorealistic 3D assets, which can also be used for faster model training and a more diverse data set. Basically, one AI generates the training data for another AI, and since it can produce almost endless permutations, the data set can be made more diverse and inclusive. Certainly, there is still a long way to go, as you might be able to tell from the results shown in Figure 4, Figure 5 and Figure 6, where IT consultants are only generated as certain males, indicating that the training data lacks diversity. Stating the gender explicitly helps to mitigate the obvious male bias, for example with this prompt shown in Figure 7: "Female IT consultant in front of a computer being excited about AI synthetic data shown on the computer screen."

Modified text prompt for female as input and Midjourney's output.

Synthetic Data is not only Generative AI

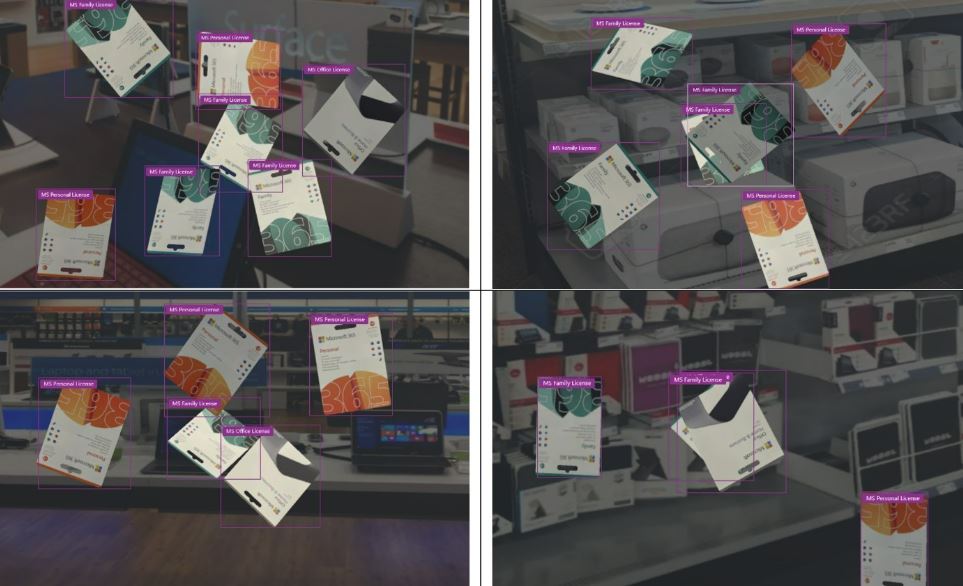

Of course, synthetic data doesn’t have to come from generative text-to-image AI; it can also be provided by hand-crafted 3D renderings with modern 3D graphics engines. This provides more control, and the scenes can be automatically modified for different light conditions, camera perspectives, shapes, and sizes, creating unlimited variations from a small data set. Our immersive team at Valorem Reply has excellent expertise in the 3D rendering field to create custom synthetic training data for AI projects. In a recent retail project, our team developed a system for automatically detecting issues on store shelves, such as wrong product placement. The customer was facing a challenge because their current model was trained on real-world images, which meant that they spent excess time waiting for those images to come in from local retail employees before the next iteration of training could take place. This was not only slowing them down, but they also couldn’t keep their model up to date with changes in the field. The solution we suggested was to use synthetic data. This meant we could create any number of data variations, and a large dataset could quickly and effectively train the model as needed. Our approach not only made the process much more efficient, it also made the model considerably more accurate.
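To make the idea of automatic scene variations a bit more concrete, here is a minimal Python sketch of how render parameters could be randomized before each synthetic image is produced. It is not the pipeline used in the retail project; the `SceneVariation` fields, the function name `sample_variations`, and all value ranges are assumptions chosen purely for illustration, and the actual rendering step in a 3D engine is left out.

```python
import random
from dataclasses import dataclass


@dataclass(frozen=True)
class SceneVariation:
    """One hypothetical set of render parameters for a synthetic shelf image."""
    light_intensity: float   # relative brightness, 1.0 = baseline lighting
    camera_yaw_deg: float    # horizontal camera angle in degrees
    camera_pitch_deg: float  # vertical camera angle in degrees
    product_scale: float     # relative size of the rendered product


def sample_variations(count: int, seed: int = 0) -> list[SceneVariation]:
    """Draw `count` random parameter sets; the ranges are illustrative only."""
    rng = random.Random(seed)  # seeded so the same data set can be regenerated
    return [
        SceneVariation(
            light_intensity=rng.uniform(0.4, 1.6),
            camera_yaw_deg=rng.uniform(-30.0, 30.0),
            camera_pitch_deg=rng.uniform(-10.0, 20.0),
            product_scale=rng.uniform(0.8, 1.2),
        )
        for _ in range(count)
    ]


if __name__ == "__main__":
    # Each parameter set would be handed to a renderer to produce one image.
    for variation in sample_variations(3):
        print(variation)
```

Because every image is rendered from known parameters, the labels (which product sits where) come for free, which is one reason this kind of data can be produced faster than photos collected in stores.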

Example of synthetic product data used to augment store shelves for AI training.

Besides text-to-image and related creative AI transformers, synthetic data is being used in a wide field of applications outside of visuals. For example, the explainability of AI models, which are mostly a black box with no insight, is particularly relevant for machine learning in finance. To understand the behavior of these models better in advance, they have to be tested with a wide variety of test data. In general, AI requires a large amount of training and test data. This is where synthetic data can help, generated either fully automatically or semi-automatically, where an input dataset is expanded and augmented with higher variance across the data set range. Synthetic data helps to build models that are more robust, more explainable, and less of a black box, as NVIDIA demonstrated here.
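The semi-automatic case, where an existing dataset is expanded with additional variance, can be sketched in a few lines of Python. This is a deliberately simple illustration and not the method NVIDIA or any specific vendor uses: the function name `augment`, the choice of multiplicative Gaussian noise, and the default parameters are all assumptions.

```python
import random


def augment(rows, copies=3, relative_noise=0.05, seed=0):
    """Return the original numeric rows plus `copies` jittered variants of each.

    Every value in a variant is scaled by (1 + noise), where noise is drawn
    from a Gaussian with standard deviation `relative_noise` (an assumed,
    illustrative choice of perturbation).
    """
    rng = random.Random(seed)  # seeded for reproducible test data
    augmented = [list(row) for row in rows]  # keep the originals first
    for row in rows:
        for _ in range(copies):
            augmented.append(
                [value * (1.0 + rng.gauss(0.0, relative_noise)) for value in row]
            )
    return augmented


if __name__ == "__main__":
    # Two hypothetical records, e.g. (amount, balance) pairs.
    seed_rows = [[120.0, 3500.0], [75.5, 910.0]]
    for row in augment(seed_rows, copies=2):
        print(row)
```

Real synthetic data generators for tabular data are usually far more sophisticated, for example by preserving correlations between columns, but the principle of widening the tested input range is the same.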

In an interesting new study, the Microsoft Generative Chemistry team, in collaboration with Novartis, developed a model that demonstrates how generative models based on deep learning may aid in transforming the drug discovery process and uncovering new molecules faster. By the way, if you want to learn more about Microsoft AI, check out my fellow AI MVP and colleague’s new video series “How may A.I help you?” where Liji is covering all of AI in an approachable manner.

Another field that relies heavily on synthetic data is robotics and, more generally, autonomous vehicles. The high diversity of our world and its endless combinations make it impossible to record every piece of training data in the real world in order to fully train a self-driving car algorithm. Advancements in photorealistic rendering techniques and neural rendering like NeRF allow us to synthesize the data instead. Car manufacturers like Tesla have demonstrated their impressive pipelines, and Microsoft has projects like AirSim to simulate lifelike environments for autonomous drone training and beyond. These computer vision tasks also play a big role in Spatial Computing for Mixed Reality devices such as the HoloLens, where they are used for things like spatial mapping, 6 DoF tracking, and eye and hand tracking. In fact, Microsoft used Digital Humans visualized as photorealistic 3D avatars to train the hand tracking of the HoloLens 2. This means the HoloLens hand tracking computer vision AI was trained with generated, synthetic frames and can detect real human hands in the wild. The nature of synthetic data allowed Microsoft to create endless variations of hand colors with different light, shading and shapes, with perfect segmentation and labels generated automatically, as Microsoft Research explains here.

Generative AI will be fueling the Metaverse

Generating visuals will also be an important puzzle piece for the future Metaverse platforms where lots of 3D assets are required to bring life into these virtual worlds. Upcoming 3D generative AI models can simply synthesize 3D assets from text input or refine real-world 3D scans captured with LiDAR scanners or modern mobile phones. This will allow users to become content creators for 3D just like mobile phones with apps turned many people into content creators.

The topic of the Metaverse in general is quite complex and is still very much in its infancy, which is why we created a new video series called Meta Minutes, making the Metaverse approachable for everyone. In short, bite-sized videos, expert guests talk with host René Schulte about various aspects of the Metaverse and Spatial Computing.

Although generative AI solutions are becoming easier to use, they are nowhere near consumer usability. Just navigating through the vast number of options and choosing the best approach for generative AI is not easy, but we are here to help! With Valorem Reply’s in-house AI and 3D immersive teams, we are exploring the possibilities for synthetic data to generate AI training data. If you are ready to build your own customized AI solutions with us, need synthetic data, or want to learn more, please reach out to us at marketing.valorem@reply.com to schedule a time with industry experts in the Metaverse, AI and beyond.