Valorem Reply’s Immersive team has been working on HoloLens Mixed Reality projects since 2015. In this article, R&I Leader Rene Schulte shares best practices and recommendations, drawn from these years of honed skills, to help you avoid pitfalls and find inspiration for your next MR/AR/VR project.

This week I was scheduled to give my freshly updated Top 10 Advanced Mixed Reality Development Best Practices talk at the dev.next conference in Colorado. Unfortunately, dev.next has been postponed until August in light of the COVID-19 pandemic to help keep our community safe. In the meantime, I thought I’d share my Top 5 best practices for MR developers working with HoloLens and the Microsoft MR platform specifically. The list is based on the many years of experience our team has accrued as one of the first Microsoft Mixed Reality Partners, working in the ever-growing and ever-changing field of Spatial Computing.

1. Build cross-platform shared experiences

The AR Cloud, sometimes called Magicverse, Mirrorworld, Live Maps or Cloud Anchors, is essentially a Digital Twin of our world: a digital content layer that is persisted and mapped to real objects and locations in the physical world. This allows virtual content to be shared cross-platform between devices and over time. Combined with 5G mobile networks, this technology will be key to Spatial Computing adoption.

Lots of startups are working in this field, along with larger tech companies like Google, Facebook and Microsoft. In my view, Azure Spatial Anchors is the furthest ahead, providing true persistence and cross-platform support for HoloLens 1 and 2, iOS ARKit and Android ARCore.

Valorem has been working with Azure Spatial Anchors since its early preview to develop custom solutions for our customers. Paris Morgan from the Microsoft Azure Spatial Anchors team and I even gave a talk at Microsoft’s //build developer conference. You can listen to a recording of our talk here for a good intro to the technology, with lots of practical use cases and scenarios and some insight into developing a cross-platform, shared mobile app with persisted content.

With more and more mobile devices shipping depth sensors such as time-of-flight cameras, 3D content for AR can be created by 3D-scanning real-world objects instead of modeling it manually from scratch.

The video above shows a demo where I used my new Samsung S20+ and its depth camera to scan a plush toy, which I then loaded as a 3D model into our Azure Spatial Anchors app to place in AR. After that, I saved the AR session and relocalized the anchor with its model attached, all on mobile. This is a powerful workflow for enterprise scenarios as well, where one can capture a real object like an engine, machine or pipe and place it in AR at a different location. With Azure Spatial Anchors, these apps can be developed in a cross-platform, shared manner, so multiple users can participate, each with their own device, and the content’s anchor can be saved at its real-world location.
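The core idea behind this anchor workflow is that content is not stored in a device’s own world coordinates, which differ per device and per session. Instead, it is stored relative to an anchor that every device can relocalize. The sketch below illustrates just that math in Python. It is not the Azure Spatial Anchors SDK: `Pose`, `to_anchor_space` and `to_world_space` are hypothetical names, poses are simplified to a yaw rotation plus a translation, and all numbers are made up.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Pose:
    """Rigid pose: a yaw rotation about the vertical (gravity) Y axis plus a translation.

    Simplification: real anchors carry a full 3D rotation (quaternion).
    """
    yaw: float  # radians
    x: float
    y: float
    z: float

    def apply(self, px: float, py: float, pz: float) -> tuple:
        # Rotate the point about Y, then translate it.
        c, s = math.cos(self.yaw), math.sin(self.yaw)
        return (c * px + s * pz + self.x, py + self.y, -s * px + c * pz + self.z)

    def compose(self, other: "Pose") -> "Pose":
        # Returns self * other, i.e. apply `other` first, then `self`.
        tx, ty, tz = self.apply(other.x, other.y, other.z)
        return Pose(self.yaw + other.yaw, tx, ty, tz)

    def inverse(self) -> "Pose":
        # Undo the translation, then the rotation.
        tx, ty, tz = Pose(-self.yaw, 0.0, 0.0, 0.0).apply(-self.x, -self.y, -self.z)
        return Pose(-self.yaw, tx, ty, tz)


def to_anchor_space(anchor_in_world: Pose, content_in_world: Pose) -> Pose:
    # What gets persisted next to the anchor ID: the content pose relative to the anchor.
    return anchor_in_world.inverse().compose(content_in_world)


def to_world_space(anchor_in_world: Pose, content_in_anchor: Pose) -> Pose:
    # Applied once another device (or a later session) has relocalized the same anchor.
    return anchor_in_world.compose(content_in_anchor)


# Device A: anchor and scanned model in A's world coordinates (made-up numbers).
anchor_a = Pose(0.3, 1.0, 0.0, 2.0)
model_a = Pose(0.8, 1.5, 0.9, 2.5)
stored = to_anchor_space(anchor_a, model_a)

# Device B has its own world origin. b_from_a only simulates that difference here;
# neither app ever knows it, device B just relocalizes the anchor at anchor_b.
b_from_a = Pose(-1.2, -4.0, 0.2, 7.0)
anchor_b = b_from_a.compose(anchor_a)
model_b = to_world_space(anchor_b, stored)
```

Because only the anchor-relative pose is persisted, device B lands the model at the same physical spot even though its coordinate system is completely different from device A’s.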

2. Learn about Deep Learning

AI Deep Learning will dramatically change how Mixed Reality apps perceive their environment, and adding intelligence to your applications helps provide a truly immersive experience. Windows AI APIs like WinML allow you to run Deep Neural Networks (DNNs) directly on the device, enabling an Intelligent Edge scenario with low-latency, hardware-accelerated inference (evaluation) on the edge.

The video above demonstrates an Augmented Intelligence scenario on the HoloLens 1, where a Deep Learning model runs in near real-time directly on the device. The app uses text-to-speech to tell the user what object is in front of them and, using spatial mapping, how far away it is. This provides an inclusive experience for visually impaired users. I have already updated this app for the HoloLens 2, and it runs even faster there. The source code is available in this GitHub repo.
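One way to get the “how far away is it” part is to cast a ray from the camera toward the detected object and intersect it with the triangles of the spatial mesh. The sketch below assumes exactly that: a plain list of mesh triangles, the Möller-Trumbore ray/triangle test, and a hypothetical `announcement` helper that builds the sentence handed to text-to-speech. It is not taken from the app’s source code; in Unity you would typically use a physics raycast against the spatial mesh colliders instead.

```python
import math
from typing import Optional, Sequence, Tuple

Vec3 = Tuple[float, float, float]
Triangle = Tuple[Vec3, Vec3, Vec3]


def _sub(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def _dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def _cross(a: Vec3, b: Vec3) -> Vec3:
    return (a[1] * b[2] - a[2] * b[1], a[2] * b[0] - a[0] * b[2], a[0] * b[1] - a[1] * b[0])


def ray_triangle(origin: Vec3, direction: Vec3, tri: Triangle, eps: float = 1e-9) -> Optional[float]:
    """Moller-Trumbore test: ray parameter t of the hit, or None if the ray misses."""
    v0, v1, v2 = tri
    e1, e2 = _sub(v1, v0), _sub(v2, v0)
    p = _cross(direction, e2)
    det = _dot(e1, p)
    if abs(det) < eps:  # ray is parallel to the triangle's plane
        return None
    inv_det = 1.0 / det
    s = _sub(origin, v0)
    u = _dot(s, p) * inv_det
    if u < 0.0 or u > 1.0:
        return None
    q = _cross(s, e1)
    v = _dot(direction, q) * inv_det
    if v < 0.0 or u + v > 1.0:
        return None
    t = _dot(e2, q) * inv_det
    return t if t > eps else None  # ignore hits behind the camera


def distance_to_mesh(origin: Vec3, direction: Vec3, mesh: Sequence[Triangle]) -> Optional[float]:
    """Distance in meters to the nearest spatial-mesh triangle along the ray."""
    length = math.sqrt(_dot(direction, direction))
    unit = (direction[0] / length, direction[1] / length, direction[2] / length)
    hits = [t for t in (ray_triangle(origin, unit, tri) for tri in mesh) if t is not None]
    return min(hits) if hits else None


def announcement(label: str, distance_m: Optional[float]) -> str:
    """Hypothetical sentence passed on to text-to-speech."""
    if distance_m is None:
        return f"{label} ahead"
    return f"{label}, about {distance_m:.1f} meters away"


# A 2 m x 2 m wall, 2 m in front of the camera, as two triangles.
wall = [((-1.0, -1.0, 2.0), (1.0, -1.0, 2.0), (1.0, 1.0, 2.0)),
        ((-1.0, -1.0, 2.0), (1.0, 1.0, 2.0), (-1.0, 1.0, 2.0))]
d = distance_to_mesh((0.5, 0.2, 0.0), (0.0, 0.0, 1.0), wall)
print(announcement("chair", d))
```

A real spatial mesh has thousands of triangles, so an engine would use a spatial acceleration structure rather than this linear scan; the linear version just keeps the idea visible.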

In the next few years, we will see a boost in AI accelerator chips for the Intelligent Edge, fueling fast, low-latency Deep Learning inference on client devices. These VPU/NPU accelerator chips will soon be in every device, just like GPUs are now, and we already see that in state-of-the-art mobile devices. With this approach, we can use the best of both worlds for the two phases of a DNN/Deep Learning AI:

- Training: Train the model in the cloud with Azure Custom Vision to take advantage of cloud computing power and scale during the resource-intensive training phase of the DNN.

- Inference: Run the DNN inference (evaluation) on the edge device to reduce latency and get real-time results.
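Even with training in the cloud, the inference side still needs a bit of app logic once the on-device model has been evaluated. A minimal sketch, assuming an image classifier that returns one raw score (logit) per label: convert the scores with softmax and only report the top label when it clears a confidence threshold. The label set, the scores and the 0.6 threshold are hypothetical; in a Windows app, the scores would come from the WinML evaluation step.

```python
import math
from typing import List, Optional, Sequence, Tuple


def softmax(logits: Sequence[float]) -> List[float]:
    """Turn raw model scores into probabilities that sum to 1."""
    m = max(logits)  # subtracting the max keeps exp() from overflowing
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def top_label(logits: Sequence[float], labels: Sequence[str],
              min_confidence: float = 0.6) -> Optional[Tuple[str, float]]:
    """Most likely label, or None if the model is not confident enough to announce anything."""
    probs = softmax(logits)
    best = max(range(len(probs)), key=lambda i: probs[i])
    if probs[best] < min_confidence:
        return None
    return labels[best], probs[best]


labels = ["chair", "table", "door"]          # hypothetical label set
print(top_label([2.0, 0.5, 0.1], labels))    # one clearly dominant score
print(top_label([0.1, 0.0, 0.05], labels))   # three near-equal scores
```

Staying silent on low-confidence frames matters for an assistive scenario: a wrong announcement is worse than a short delay until the model is sure.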

If you are new to AI, I’d recommend familiarizing yourself with the concepts of Deep Learning and the different model types, so you are prepared for the AI revolution and Software 2.0. This SIGGRAPH workshop provides an excellent introduction: http://bit.ly/dnncourse.

3. Make good use of the HoloLens 2 Instinctual Input

The Microsoft HoloLens 2 is by far the most sophisticated Spatial Computing device on the market, and one of its key benefits is its range of input modalities, like eye and hand tracking.

The HoloLens 2 provides fully articulated hand tracking, allowing developers to build intuitive Mixed Reality applications that just work naturally for users. I mean, what could be more intuitive and cooler than touching virtual holograms with your own, real hands?

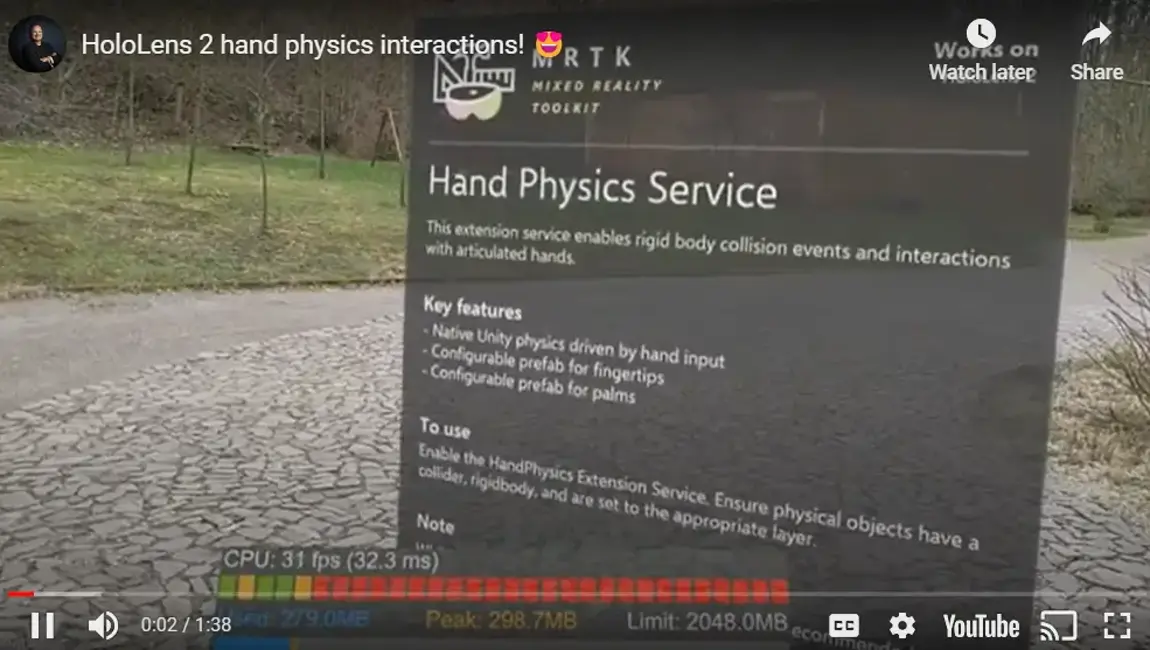

The open source Mixed Reality Toolkit (MRTK) makes it very easy to use HoloLens 2 instinctual input by providing UI controls that work with direct hand input, as well as an abstraction layer for hand data such as the finger joints.
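To give an idea of what joint-level hand data enables, here is a small sketch of a pinch detector built from just two joint positions, the thumb tip and the index finger tip. It is plain Python, not MRTK code (MRTK already ships its own pinch and near-interaction handling). The 2 cm and 4 cm thresholds are assumptions, and the gap between them adds hysteresis so the gesture does not flicker when the fingers hover around a single threshold.

```python
import math
from dataclasses import dataclass
from typing import Tuple

Vec3 = Tuple[float, float, float]


@dataclass
class PinchDetector:
    """Pinch state from the thumb-tip / index-tip distance, with hysteresis."""
    press_m: float = 0.02    # assumption: pinch starts below 2 cm
    release_m: float = 0.04  # assumption: pinch ends above 4 cm
    pinching: bool = False

    def update(self, thumb_tip: Vec3, index_tip: Vec3) -> bool:
        gap = math.dist(thumb_tip, index_tip)
        if self.pinching and gap > self.release_m:
            self.pinching = False
        elif not self.pinching and gap < self.press_m:
            self.pinching = True
        return self.pinching


# Simulated joint positions (meters) over four frames.
detector = PinchDetector()
thumb = (0.0, 0.0, 0.0)
states = [detector.update(thumb, (gap, 0.0, 0.0)) for gap in (0.05, 0.015, 0.03, 0.05)]
print(states)
```

In the third frame the fingers are 3 cm apart, which is above the press threshold but below the release threshold, so the pinch is held instead of toggling off.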

By the way, the MRTK supports all Mixed Reality devices and provides APIs to build great solutions for Holographic Mixed Reality devices like HoloLens as well as for Immersive Mixed Reality VR headsets. Developers can leverage the increased reach of this shared platform and write apps that support both AR and VR experiences, for example by dynamically enabling content like a virtual environment when the app is running on an Immersive Mixed Reality device in a VR setting.

4. Treat the HoloLens as a mobile device

The Microsoft HoloLens is fully untethered, which means all the computing happens inside the device. There are no cables, so users can walk around freely, bringing their physical and virtual worlds together. This is a huge advantage compared to other Spatial Computing devices that require a PC for the actual computing. On the other hand, it also limits the computing power, since there is no desktop-class graphics card. Still, your app needs to run at 60 fps to ensure high hologram stability. Unstable holograms and rendering at 30 fps or less not only provide a poor user experience but can also make users feel sick, causing nausea and headaches, so maintaining 60 fps is crucial.
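The 60 fps target translates into a hard time budget: 1000 ms / 60 ≈ 16.7 ms per frame for everything the app does, compared with 33.3 ms at 30 fps. A small helper like the one below makes it easy to check captured frame timings against that budget. It is plain Python; the frame times are made-up sample values, and on a real device they would come from a profiler.

```python
from typing import Sequence


def frame_budget_ms(target_fps: float) -> float:
    """Time available for one complete frame (CPU and GPU work) at the target rate."""
    return 1000.0 / target_fps


def fraction_over_budget(frame_times_ms: Sequence[float], target_fps: float = 60.0) -> float:
    """Share of measured frames that took longer than the budget, i.e. missed the target rate."""
    if not frame_times_ms:
        return 0.0
    budget = frame_budget_ms(target_fps)
    missed = sum(1 for t in frame_times_ms if t > budget)
    return missed / len(frame_times_ms)


print(f"{frame_budget_ms(60):.2f} ms per frame at 60 fps")
print(fraction_over_budget([12.0, 15.5, 18.2, 16.0]))
```

Looking at the share of missed frames, not just the average frame time, matters because a few long frames are exactly what users perceive as unstable holograms.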

The GPU of the HoloLens is mostly fill-rate bound: it can easily render tens of thousands of polygons (triangles), but if too many pixels are drawn with complex processing for each, the load can become too much for the mobile GPU. Therefore, best practices are to:

- Use simple pixel shaders instead of, for example, the Unity Standard Shader. Some models even look reasonably good with vertex lighting and do not require per-pixel lighting, so you can get away with a very lightweight pixel shader, or with light baking for static objects. The Mixed Reality Toolkit also includes highly optimized MRTK Standard shaders that can be used as an out-of-the-box solution or as a starting point for your own shader development.

- Avoid overdraw and depth-stacked content, since each covered pixel then has to be processed many times.

- Be smart about screen-space effects by not using them. Do not bother with Screen Space Ambient Occlusion or similar effects like Depth of Field, outline shading, etc. They simply take too much computing time to render.
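Because the HoloLens GPU is fill-rate bound, a back-of-the-envelope model of its load is pixels × eyes × frame rate × overdraw × per-pixel shader cost, and the triangle count barely shows up in it. The sketch below is only that rough model, not a profiler. The 1280 x 720 per-eye resolution is a hypothetical example and `shader_cost` is an arbitrary relative unit.

```python
def relative_fill_cost(width: int, height: int, eyes: int, fps: float,
                       overdraw: float, shader_cost: float = 1.0) -> float:
    """Rough pixel-shading work per second, in arbitrary units.

    overdraw:    average number of times each screen pixel is shaded per frame
    shader_cost: relative cost of the pixel shader (1.0 = a very simple shader)
    """
    return width * height * eyes * fps * overdraw * shader_cost


baseline = relative_fill_cost(1280, 720, 2, 60, overdraw=1.0)
stacked = relative_fill_cost(1280, 720, 2, 60, overdraw=3.0)
print(stacked / baseline)
```

The model is linear in every factor. Three stacked layers of content cost about three times as much as one, a heavier shader multiplies on top of that, and doubling the pixel count doubles the cost as well.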

You might wonder whether the HoloLens 2 is more powerful than the HoloLens 1 and can handle more intensive rendering. It can, but my tests have shown that the difference is minimal. This is mainly because the HoloLens 2 has twice the display / field-of-view size of the HoloLens 1, so the extra graphics power is already used up rendering roughly twice as many pixels.

The video above shows the fun Surfaces demo by the Microsoft Mixed Reality Design Labs, which pushes the HoloLens 2 quite a bit with some nice instinctual input, advanced shaders and rendering techniques. It’s even open source, so you can take a look and get inspired.

In addition to the fill-rate limit, the polygon count of the models is also a challenge, as we developers often deal with highly tessellated 3D models from CAD programs. Valorem Reply’s Chris Galvanin wrote a nice blog post summarizing the hybrid approach we use for large 3D models that would not render well as-is on a mobile device like the HoloLens.
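A common, generic technique for highly tessellated CAD models (not necessarily the hybrid approach from that post) is to decimate them offline into several levels of detail (LODs) and switch between them at runtime based on the viewer’s distance. The sketch below shows only the runtime selection. The `Lod` type, the distance thresholds and the triangle counts are hypothetical.

```python
import math
from dataclasses import dataclass
from typing import Sequence


@dataclass(frozen=True)
class Lod:
    max_distance_m: float  # use this level while the viewer is at most this far away
    triangles: int


def pick_lod(lods: Sequence[Lod], distance_m: float) -> Lod:
    """Most detailed level whose range covers the distance.

    `lods` must be sorted from near/detailed to far/coarse.
    """
    for lod in lods:
        if distance_m <= lod.max_distance_m:
            return lod
    return lods[-1]  # beyond every threshold: keep the coarsest level


# Hypothetical levels pre-generated offline by decimating one CAD model.
engine_lods = [Lod(1.0, 200_000), Lod(3.0, 50_000), Lod(math.inf, 10_000)]
print(pick_lod(engine_lods, 2.0).triangles)
```

Distance is a simple proxy for on-screen size; engines such as Unity typically switch LODs based on the object’s screen-space height instead, but the selection logic follows the same pattern.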

5. Let your apps live in the real-world

The HoloLens is packed with a suite of sensors, in particular the depth sensor that measures the distance to the surrounding real-world environment. The HoloLens uses it to reconstruct a spatial mesh, a polygonal model of the real world.

Developers should leverage this spatial reconstruction to make virtual holograms part of the real world. For example, you can position a hologram on a physical table or even let real-world objects occlude virtual holograms.
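Placing a hologram on a physical table usually starts with a raycast against the spatial mesh, for example from the user’s gaze, which returns a hit point and the surface normal there. A minimal sketch of the next step, assuming Y is up and that the hit point and normal are already available: accept only roughly horizontal surfaces and lift the hologram by half its height so it rests on top rather than sinking in. `place_on_surface` and the 10 degree tolerance are hypothetical.

```python
import math
from typing import Optional, Tuple

Vec3 = Tuple[float, float, float]
UP: Vec3 = (0.0, 1.0, 0.0)


def place_on_surface(hit_point: Vec3, hit_normal: Vec3, half_height: float,
                     max_tilt_deg: float = 10.0) -> Optional[Vec3]:
    """Center position that rests a hologram on a roughly horizontal surface, or None."""
    length = math.sqrt(sum(c * c for c in hit_normal))
    n = tuple(c / length for c in hit_normal)
    # The angle between the surface normal and "up" must be small for a table-like surface.
    if sum(a * b for a, b in zip(n, UP)) < math.cos(math.radians(max_tilt_deg)):
        return None  # wall, ceiling or steep slope: not a place to set something down
    # Lift the center by half the object's height along the normal so it sits on the surface.
    return tuple(p + half_height * c for p, c in zip(hit_point, n))


print(place_on_surface((0.2, 0.75, 1.5), (0.0, 1.0, 0.0), half_height=0.1))  # table top
print(place_on_surface((0.0, 1.2, 2.0), (0.0, 0.0, -1.0), half_height=0.1))  # wall
```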

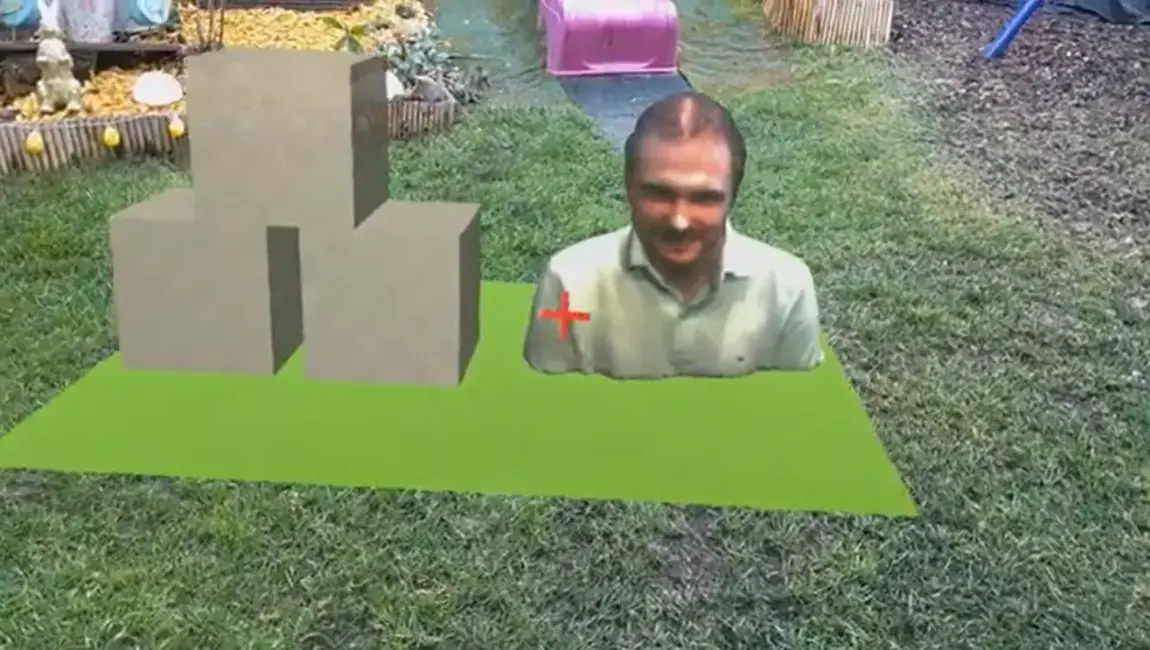

The HoloLens 2 does an even better job with its new depth camera and increased computing power. This allows you to build fun experiences where virtual objects physically interact with the real world. It can even resolve centimeter-scale details, such as the small dent in our garden slide where the virtual spheres get stuck:

Additionally, marker-based tracking can be leveraged to create reference points in the real world and anchor virtual holograms to physical objects. Nowadays, techniques like Vuforia Model Targets replace fiducial markers with existing 3D models of physical objects, allowing developers to create true Mixed Reality experiences, like our Tire Explorer app. You can see it in action with real-world tire tracking in this video, or even try the app yourself (without a real-world tire).

The HoloLens 2 also supports Scene Understanding, which analyses the Spatial Map further using semantic understanding to allow more meaningful placement of virtual objects on real-world surfaces. We covered HoloLens 2 Scene Understanding in detail in a previous post.
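The difference from raw spatial mapping is that the app can ask for a kind of surface instead of just any triangle. A minimal sketch, assuming the app has already received a list of labeled surfaces: pick the largest platform that is big enough and at a plausible table height. `Kind` only loosely mirrors the object kinds Scene Understanding reports (walls, floors, ceilings, platforms). `SceneSurface`, `best_tabletop`, the thresholds and the room data are hypothetical and are not the Scene Understanding SDK API.

```python
from dataclasses import dataclass
from enum import Enum, auto
from typing import Optional, Sequence


class Kind(Enum):
    # Loosely modeled on the object kinds Scene Understanding reports; not the SDK's type.
    WALL = auto()
    FLOOR = auto()
    CEILING = auto()
    PLATFORM = auto()
    UNKNOWN = auto()


@dataclass(frozen=True)
class SceneSurface:
    kind: Kind
    area_m2: float
    height_m: float  # height above the floor


def best_tabletop(surfaces: Sequence[SceneSurface], min_area_m2: float = 0.25,
                  max_height_m: float = 1.3) -> Optional[SceneSurface]:
    """Largest platform that is big enough and low enough to work as a table."""
    candidates = [s for s in surfaces
                  if s.kind is Kind.PLATFORM
                  and s.area_m2 >= min_area_m2
                  and s.height_m <= max_height_m]
    return max(candidates, key=lambda s: s.area_m2, default=None)


room = [
    SceneSurface(Kind.FLOOR, 20.0, 0.0),
    SceneSurface(Kind.WALL, 8.0, 1.2),
    SceneSurface(Kind.PLATFORM, 0.1, 0.5),   # stool: too small
    SceneSurface(Kind.PLATFORM, 0.6, 0.75),  # desk
    SceneSurface(Kind.PLATFORM, 0.9, 2.0),   # top of a cabinet: too high
]
print(best_tabletop(room))
```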

Regardless of which approach you choose, make sure to avoid the floating AR sticker effect, where the virtual object (the “hologram”) simply floats in front of the user. Instead, leverage the unique spatial understanding of the device to enrich the user experience.

We are excited to release even more Spatial Computing material this year. Keep an eye on our Valorem social pages for details. Already have a Spatial Computing project in mind? We would love to hear about it!

Stay safe! ❤