Microsoft's newest Azure Mixed Reality service, Azure Remote Rendering, solves the challenge of viewing complex 3D models on the HoloLens 2 device without losing polygon detail or spending valuable time downsampling. This article explains why this is such a big milestone for Mixed Reality developers and answers your questions about how the new service works.

It’s an exciting time in the Azure Mixed Reality world, with regular releases of groundbreaking services like Azure Spatial Anchors. Microsoft recently announced another game-changing service, now in public preview as part of the Azure portfolio: Azure Remote Rendering (ARR). ARR lets you visualize huge 3D models with millions of polygons, a level of detail that was previously impossible to render locally on an untethered mobile device like the HoloLens 2. By leveraging Azure GPU cloud rendering, streamed in real time to the HoloLens, it’s now possible!

We at Valorem Reply were fortunate enough to have early access to the ARR private preview and have been putting it to the test for a while. In this post, I want to share more details on why ARR is another transformational technology and how it works.

Why is it such a milestone?

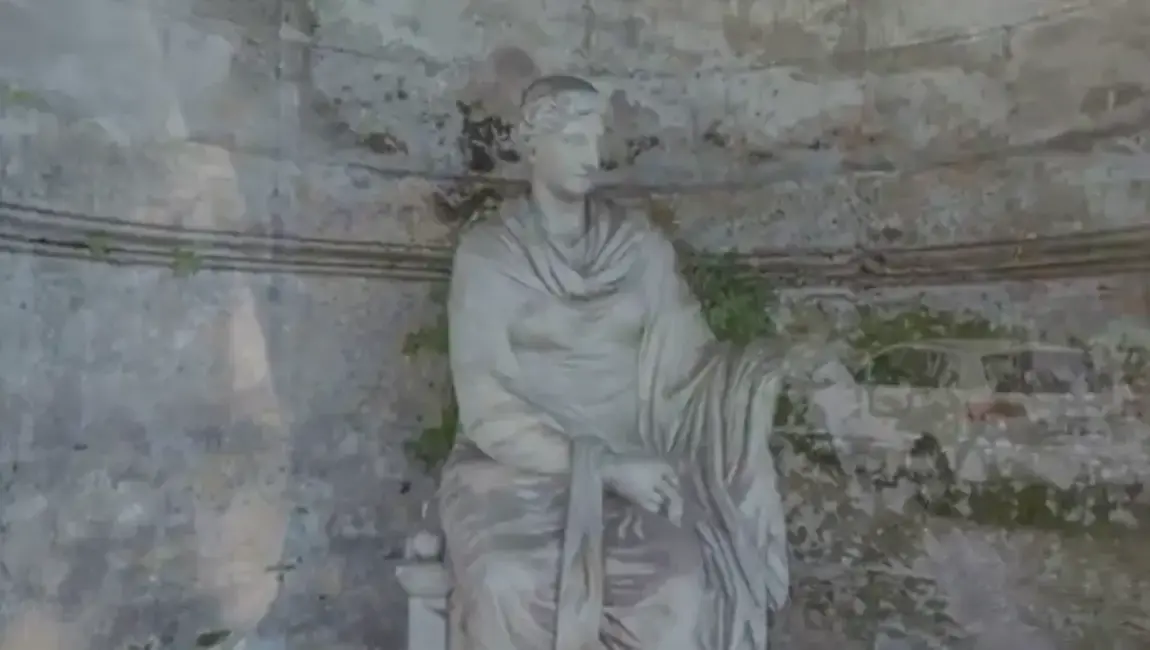

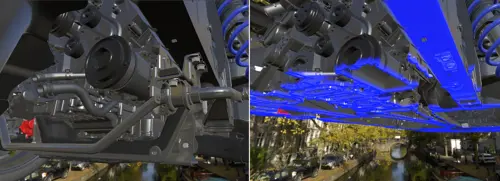

Azure Remote Rendering allows users to view complex 3D models with millions of polygons on a mobile device like the HoloLens 2. These large 3D meshes are typically generated by CAD programs, which many companies use to design their products, machines, buildings, and more. Because CAD is the de facto gold standard for 3D design, the business use cases for visualizing CAD models in Mixed Reality are nearly endless. Another important application is using photogrammetry 3D scans of real-world objects as a Digital Twin when combined with IoT. These scans tend to have a high polygon count and are typically quite complex. With ARR, they can now be visualized as is, without low-resolution downsampling.

The business value is high when we can use a CAD design or a 3D scan Digital Twin as is (without labor-intensive reduction steps) and show it in the context of the physical world blended in with Mixed Reality.

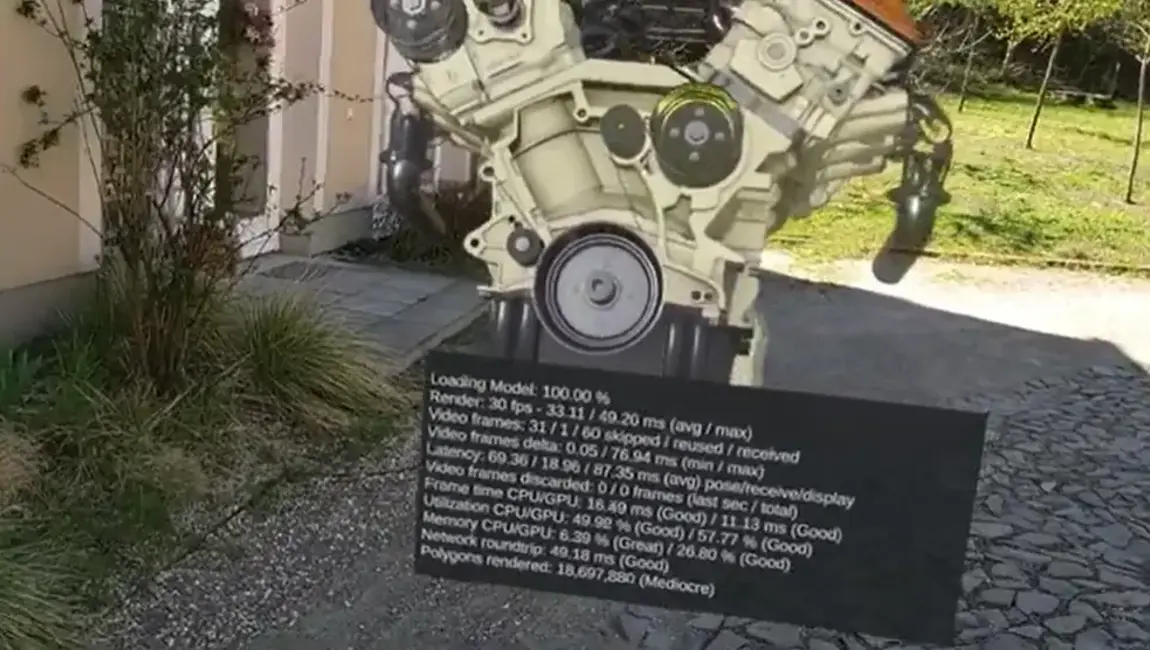

Mobile Spatial Computing devices like the HoloLens can’t render these complex 3D models as they are, because doing so requires a powerful desktop graphics card. That means one has to use a 3D mesh simplification method to reduce the size of these models. Mesh reduction inherently means information loss, because many polygons have to be removed. If a use case doesn’t require every detail in the HoloLens visualization, this may not be a problem; in most cases, however, you want to see all the details. ARR fills that gap by leveraging cloud rendering with real-time streaming, allowing us to visualize these complex models with all their details, without a time-consuming mesh reduction process and the information loss that comes with it. ARR also allows developers to use resource-intensive, physically based rendering (PBR) materials for more photo-realistic visualization, which would not be possible on a mobile device but is common on today’s powerful desktop graphics cards.

Remote Rendering is a key technology for future Spatial Computing devices with small, lightweight form factors, and ARR is capable of photo-realistic rendering of 3D models with hundreds of millions of polygons on a Spatial Computing device like the HoloLens 2.

How does it work?

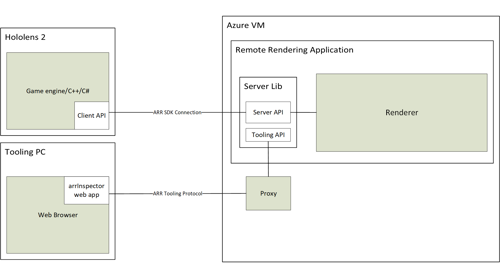

The HoloLens Mixed Reality runtime supports a technology called Holographic Remoting, which allows developers to render an entire 3D scene on a PC and stream the result to the HoloLens. To accomplish this, the Holographic Remoting Player app on the HoloLens connects to the PC via Wi-Fi and sends the HoloLens input, such as head position, rotation, and hand input, to the PC. On the PC, a specially built UWP application processes that input, renders the scene accordingly, and streams the resulting stereo frames back to the HoloLens, where they are displayed.
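To make that round trip concrete, here is a toy model in Python. It is not Holographic Remoting’s actual protocol: it only tracks head yaw, treats rendering as instantaneous, and models the network as a fixed delay of `latency_ticks` display ticks (a hypothetical parameter). What it does show is the key consequence of remote rendering: the frame on screen was rendered for a head pose from the past.

```python
from collections import deque
from dataclasses import dataclass


@dataclass(frozen=True)
class Frame:
    rendered_for_yaw: float  # head yaw (degrees) the PC used when rendering


def remoting_loop(head_yaws, latency_ticks):
    """Toy model of the remoting round trip (hypothetical, simplified).

    At each display tick the device sends its current head yaw to the PC,
    the PC renders a frame for that yaw, and the frame arrives back
    `latency_ticks` ticks later. Returns, per tick, the yaw the displayed
    frame was rendered for (None until the first frame has arrived).
    """
    in_flight = deque()
    shown = []
    for yaw in head_yaws:
        # Device sends input; the PC renders a frame for exactly this pose.
        in_flight.append(Frame(rendered_for_yaw=yaw))
        if len(in_flight) > latency_ticks:
            # The oldest frame has made it back and is displayed now.
            shown.append(in_flight.popleft().rendered_for_yaw)
        else:
            shown.append(None)  # nothing has come back yet
    return shown
```

With a two-tick delay, `remoting_loop([0, 10, 20, 30, 40], 2)` returns `[None, None, 0, 10, 20]`: the head has already turned to 40°, but the user is looking at a frame rendered for 20°.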

Azure Remote Rendering High-level architecture

Azure Remote Rendering (ARR) takes this to the next level. First of all, you don’t need a specially built PC app to render the scene on your local Wi-Fi network. ARR renders the scene (or parts of it) on powerful Azure GPU virtual machines. Therefore, one does not need to manage a powerful PC on the local network or build a special Holographic Remoting UWP app that has to run in sync with the HoloLens app; ARR manages all of that using multi-GPU cloud computing. Even better, ARR provides a hybrid rendering approach: one can render parts locally, like a custom UI, to provide functionality to the user and have only the complex model rendered remotely in the cloud. ARR then automatically combines the locally rendered content with the remote frame, including correct occlusion. The best of both worlds. More details about the architecture and the processing cycle are available in the Azure Remote Rendering documentation.
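The occlusion step can be illustrated with a per-pixel depth test, the standard way to merge two rendered layers. This is a simplified sketch and not ARR’s actual compositor: it assumes both the local and the remote frame come with a depth value per pixel (with `float('inf')` meaning nothing was drawn there) and simply keeps the closer fragment.

```python
def composite(local_color, local_depth, remote_color, remote_depth):
    """Merge a locally rendered layer with a remote frame by depth.

    All arguments are equal-length flat lists (one entry per pixel). Depth is
    the distance from the viewer; float('inf') means "nothing rendered here".
    For each pixel, the fragment closer to the viewer wins.
    """
    if not (len(local_color) == len(local_depth) == len(remote_color) == len(remote_depth)):
        raise ValueError("all buffers must have the same number of pixels")
    out = []
    for lc, ld, rc, rd in zip(local_color, local_depth, remote_color, remote_depth):
        # Ties go to the local layer so UI drawn exactly on a surface stays visible.
        out.append(lc if ld <= rd else rc)
    return out
```

In this model, a local UI button 0.5 m away stays visible in front of a remote model 2 m away, while a part of the model 1 m away correctly hides a UI panel placed at 3 m.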

How well does it work?

In one word: astonishing!

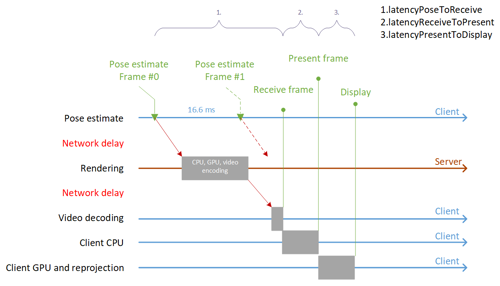

As you can imagine, the processing cycle of sending input, rendering remotely, and streaming the result back introduces a noticeable delay, and latency is exactly what you want to avoid in real-time rendering. The ARR team did an amazing job of compensating for this latency with smart remote rendering techniques that use pose estimation and leverage additional HoloLens 2 hardware features such as late-stage reprojection. Because of this, only a HoloLens 2 or a Windows 10 PC is supported as the client.
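ARR’s exact prediction and reprojection techniques aren’t covered here, but the basic idea behind pose estimation can be sketched with a generic constant-velocity extrapolation: estimate where the head will be once the frame arrives and render for that pose instead of the last known one. The function name and the constant-velocity assumption are illustrative only; real systems use richer motion models and then let late-stage reprojection on the device correct the remaining error.

```python
def predict_yaw(prev_yaw, curr_yaw, dt, latency):
    """Extrapolate head yaw `latency` seconds ahead (illustrative sketch).

    prev_yaw and curr_yaw are two yaw samples in degrees taken `dt` seconds
    apart. Assumes the head keeps turning at the same angular velocity.
    """
    if dt <= 0:
        raise ValueError("dt must be positive")
    angular_velocity = (curr_yaw - prev_yaw) / dt  # degrees per second
    return curr_yaw + angular_velocity * latency
```

A head turning at 50°/s (1° per 20 ms sample) with 60 ms of latency yields a prediction about 3° ahead of the last sample: `predict_yaw(10.0, 11.0, 0.02, 0.06)` is approximately `14.0`.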

I think the results shown in my demo videos above speak for themselves. One can even interact with the remotely rendered model: switch parts of the model hierarchy on and off, tint parts, apply outlines, use cut planes, and much more. The rendered result is shown in amazing PBR quality without any perceptible delay, which is a huge milestone for remote rendering.
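Cut planes are one of those interactive features. Conceptually, a cut plane hides all geometry on one side of a plane, and the core test is the sign of a dot product. This is a generic geometry sketch rather than ARR’s API; which side counts as visible (here, the side the normal points to) is a convention chosen for the example.

```python
def on_visible_side(point, plane_point, plane_normal):
    """Return True if `point` is on the kept side of a cut plane (or on it).

    All arguments are (x, y, z) tuples. The plane passes through
    `plane_point`; geometry on the side `plane_normal` points to is kept,
    everything on the opposite side would be cut away.
    """
    # Signed distance (scaled by |normal|) of the point from the plane.
    signed = sum((p - q) * n for p, q, n in zip(point, plane_point, plane_normal))
    return signed >= 0
```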

What network is required and what about 5G?

Of course, real-time remote rendering requires a low-latency network connection with sufficient bandwidth. Microsoft recommends 50 Mbps downstream and 10 Mbps upstream. In my tests, though, it used only 15-20 Mbps downstream for a model with 18.7 million polygons, so it would even work over a 4G mobile network (but I’d rather stick with the official recommendation).
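To put these bitrates into perspective, the following sketch converts a bitrate into an average per-frame budget. It assumes a 60 frames-per-second stream (an assumption for this calculation only) and ignores protocol overhead.

```python
def kilobytes_per_frame(mbps, fps=60):
    """Average size budget per streamed frame in kilobytes.

    mbps: bitrate in megabits per second (1 Mbit = 1,000,000 bits).
    fps:  frames per second of the stream (60 is an assumed default).
    """
    bits_per_frame = mbps * 1_000_000 / fps
    return bits_per_frame / 8 / 1000  # bits -> bytes -> kilobytes
```

At the 15-20 Mbps I measured, that is roughly 31-42 KB per frame; the recommended 50 Mbps allows about 104 KB per frame.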

It is worth mentioning that 5G will be a big accelerator for these use cases: it provides high bandwidth and, even more importantly, features like dedicated network slices and other improvements that guarantee bandwidth and low-latency connectivity.

What are the other conditions?

The Azure region also matters a lot for low-latency rendering. The Azure Remote Rendering service is currently available only in a limited set of Azure regions, listed in the ARR documentation. When building an app with ARR, make sure to configure the supported region closest to where the app is being used.
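Choosing the closest region is ultimately a latency question. The sketch below picks the region with the lowest median round-trip time from latency samples you have collected yourself (how to measure them is out of scope here). The function name is hypothetical, and the region names and numbers in the usage example are made up; always check that a region is actually supported by ARR.

```python
from statistics import median


def pick_region(rtt_ms):
    """Return the region with the lowest median round-trip time.

    rtt_ms maps a region name to a non-empty list of latency samples in
    milliseconds. The median is used so a single slow probe doesn't skew
    the choice.
    """
    if not rtt_ms:
        raise ValueError("no regions measured")
    return min(rtt_ms, key=lambda region: median(rtt_ms[region]))
```

For example, `pick_region({"westeurope": [38, 41, 120], "eastus": [95, 97, 99]})` returns `"westeurope"`; the single 120 ms outlier does not change the result.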

The remote rendering engine can’t work directly with CAD or tessellated formats like FBX or glTF; models must first be converted to a proprietary ARR binary format. Fortunately, this conversion can be automated with a custom pipeline built as part of a project.
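A conversion pipeline usually starts by deciding which files still need converting. The following sketch covers only that bookkeeping step; submitting the actual conversion job to ARR is deliberately left out. The function name is hypothetical, and the accepted input extensions are an assumption based on the formats mentioned above; `.arrAsset` is used as the extension of the converted ARR model. Check the ARR documentation for the current list of supported inputs.

```python
from pathlib import PurePath

# Assumed set of convertible input formats for this sketch.
CONVERTIBLE_SUFFIXES = {".fbx", ".gltf", ".glb"}


def plan_conversions(file_names, already_converted):
    """Return (source_name, target_name) pairs for files still to convert.

    file_names:        iterable of file names, e.g. from os.listdir(folder)
    already_converted: set of file stems that already have a converted asset
    """
    jobs = []
    for name in sorted(file_names):
        path = PurePath(name)
        if path.suffix.lower() in CONVERTIBLE_SUFFIXES and path.stem not in already_converted:
            jobs.append((name, path.stem + ".arrAsset"))
    return jobs
```

Passing in the contents of a shared folder, e.g. `plan_conversions(os.listdir(folder), done)`, yields a work list that a scheduled job could then submit for conversion.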

Another factor to consider is the cost of the Azure GPU virtual machines that run under the hood and host the remote 3D engine that renders the remote image. These are not free, of course, but the pricing is reasonable, starting at $3/hour as of April 2020.
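For budgeting, a back-of-the-envelope estimate multiplies session hours by the hourly rate. The $3/hour default is the April 2020 starting price mentioned above; actual rates depend on VM size and region, so treat it as a placeholder and check current Azure pricing.

```python
def session_cost(hours_per_day, days, hourly_rate=3.0):
    """Rough VM cost estimate in USD for a given usage pattern.

    hourly_rate defaults to the ~$3/hour starting price quoted for
    April 2020; replace it with the current rate for your VM size and region.
    """
    if hours_per_day < 0 or days < 0:
        raise ValueError("usage must be non-negative")
    return hours_per_day * days * hourly_rate
```

A pilot that runs sessions 4 hours a day for 20 working days comes to `session_cost(4, 20)`, i.e. 240.0 USD at that rate.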

Also note that these VMs are not kept running while idle, to avoid generating costs, so they need some time to spin up. If the app is started for the first time in a while, it can take a minute or two until the remote rendering service is connected. But once it is connected and the initial handshake is done, it’s unbelievably fast and the results are indeed real-time.
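Because of this spin-up time, an app should start the session early and wait for it to become ready instead of assuming it is available immediately. The polling loop below is a generic sketch: `get_status` is a hypothetical stand-in for however your client queries session state, and the status strings `"Ready"` and `"Error"` are assumptions, not ARR’s documented values. The default five-minute timeout leaves headroom over the minute or two I observed.

```python
import time


def wait_until_ready(get_status, timeout_s=300.0, poll_interval_s=5.0,
                     sleep=time.sleep, clock=time.monotonic):
    """Poll a (hypothetical) session status until it reports "Ready".

    Returns the number of polls made. Raises RuntimeError on "Error" and
    TimeoutError if the session isn't ready before the deadline. `sleep` and
    `clock` are injectable so the loop can be tested without real waiting.
    """
    deadline = clock() + timeout_s
    polls = 0
    while True:
        polls += 1
        status = get_status()
        if status == "Ready":
            return polls
        if status == "Error":
            raise RuntimeError("session failed to start")
        if clock() + poll_interval_s > deadline:
            raise TimeoutError("session not ready within timeout")
        sleep(poll_interval_s)
```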

How to get started?

The Azure Remote Rendering documentation is a great way to get started, providing tutorials and a Quickstart with a Unity project on GitHub. Keep in mind that ARR is in public preview. While working with custom models, I helped uncover a few bugs in the documentation and the backend, but the ARR team was quick to fix them and made sure the Quickstart now works well.

One still needs to be quite familiar with 3D rendering and experienced with Azure and HoloLens development to get started with Azure Remote Rendering. We are here to help with our experienced team of Azure and HoloLens developers, artists, and designers. If you have a Spatial Computing project in mind, with or without Remote Rendering, we would love to hear about it! Reach out to us at marketing@valorem.com to schedule time with one of our industry experts.