Our #SummerofAI series with Global R&I Director, Rene Schulte, continues. This month we cover the ethical implications of AI and the need for Responsible AI.

Artificial Intelligence (AI) solutions continue to surface in almost every aspect of our lives. This includes not just the professional world with Industrial IoT (IIoT), robotics, automation, and more use cases, but also AI being interwoven into our day-to-day personal lives. This could be in the form of smartphone apps, assistants, bots and even right now, as I write this text with Microsoft Word leveraging smart AI text predictions.

In our recent #SummerOfAI posts about Intelligent Edge AI Video Analytics and Democratization of AI, we shared business indicators and real-world use cases showing the disruptive nature of AI and how it can now be easily developed without prior data science or software development knowledge using low-code / no-code AI solutions. As the saying goes, with great power comes great responsibility, so in this post we will continue our #SummerOfAI theme and take a deeper look at the ethical implications of the AI revolution and how responsible AI usage must be ensured for trustworthy, successful business outcomes.

The Negative Side of the AI Revolution

AI technology has made tremendous progress, and we now have massive AI models such as transformers with billions of parameters. For example, Microsoft’s Turing or GPT-3 can perform incredible tasks like producing human-like text, images and more. Of course, AI can also be abused and lead to negative consequences like Deep Fakes and fake Neural Voice Synthesis, where a victim’s visual and even audio appearance can be replicated.

As usual, there are two sides to the coin: Deep Fake technology with facial reenactment like the example above can be quite fun and actually useful for business. For example, by generating custom talking-head videos from simple text input, marketing teams could create content at scale. In the video below, you can see one example where I just provided the text and a background graphic, then selected a speaker persona and language, and after a short time got this nice video generated fully automatically:

Fortunately, most use cases for AI are inherently good and can even help to save lives, as with AI for Health, but of course powerful tools like AI can also be abused. Even worse, bad things can happen even if AI models are used as intended and not actively misused. Training data can contain a non-inclusive set of examples, which introduces bias when the AI model is used during inference / evaluation for predictions. For example, one tech giant used a recruiting tool based on Machine Learning that was designed to screen resumes and select only the best candidates. As it turned out, the training data set introduced a bias that preferred male over female applicants. This was not done with bad intent by the developers of the AI model; rather, the male dominance across the tech industry meant there was more male data in the training data set.
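To make the effect of skewed training data more tangible, here is a minimal sketch that compares how often a screening model selects candidates from each group. The records, the group labels and the 0.8 threshold (the commonly cited "four-fifths" rule of thumb) are illustrative assumptions and not data from the recruiting tool mentioned above.

```python
from collections import defaultdict


def selection_rates(records):
    """Return the share of positive decisions per group.

    records: iterable of (group, selected) pairs, where selected is True/False.
    """
    totals = defaultdict(int)
    positives = defaultdict(int)
    for group, selected in records:
        totals[group] += 1
        if selected:
            positives[group] += 1
    return {group: positives[group] / totals[group] for group in totals}


def disparate_impact_ratio(rates):
    """Ratio of the lowest to the highest group selection rate (1.0 means parity)."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # nobody was selected in any group, so there is no disparity to report
    return min(rates.values()) / highest


# Hypothetical screening decisions, made up for illustration only.
decisions = (
    [("male", True)] * 6 + [("male", False)] * 4
    + [("female", True)] * 3 + [("female", False)] * 7
)

rates = selection_rates(decisions)
ratio = disparate_impact_ratio(rates)
print(rates)
print(f"disparate impact ratio: {ratio:.2f}")
if ratio < 0.8:  # assumed rule-of-thumb threshold, adjust to your own policy
    print("Selection rates differ noticeably between groups; review model and data.")
```

A check like this does not explain why the disparity exists, but it is cheap to run on every model version and can flag a problem before the model reaches production.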

Another recent example of AI gone bad was Twitter’s photo-cropping algorithm, which Twitter users suggested favored white faces over black faces. Twitter launched a bug bounty to analyze the bias problem more closely and just announced the results of the competition. The winning entry showed that Twitter’s automatic photo-cropping favors faces that are “slim, young, of light or warm skin color and smooth skin texture, and with stereotypically feminine facial traits.” This is likely a result of the coding being done by a sole author, who brought in their own biases about people's appearances, such as preconceptions about femininity and age. Other entries suggested that the photo-cropping system has a bias against people with grey or white hair and favors English over Arabic text in images, introducing cultural and age discrimination.

A research group from the University of Toronto analyzed many more systems with their free bias analytics service and concluded that “Almost every AI system we tested has some sort of significant bias”.

Responsible AI is Essential for each Business

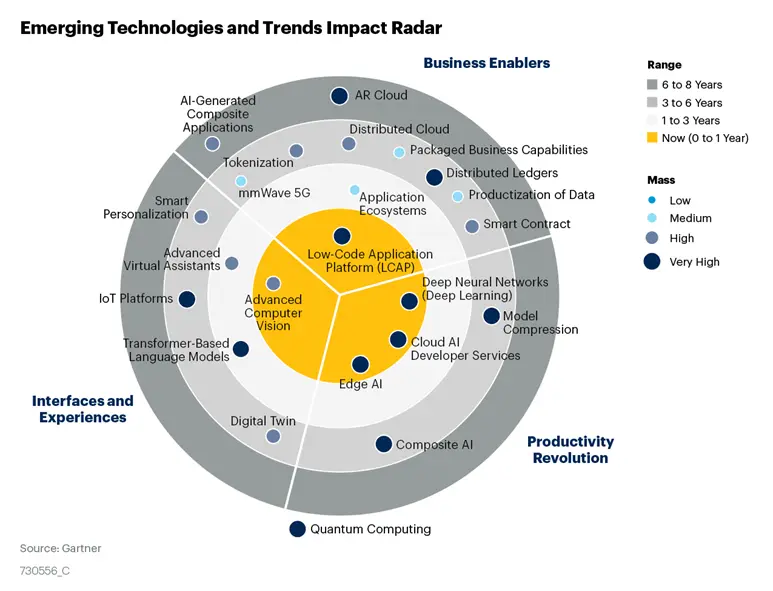

The above cases caused significant public outrage (on social media) and fueled AI skepticism, further driving the topic of Responsible AI. In fact, market research firm Gartner features it on their emerging tech hype cycle and recently released an article on How to Make AI Trustworthy. Algorithmic trust and Digital Ethics should be key ingredients of any AI project to prevent such issues from happening and to mitigate biases early, before it’s too late. This can be done with Responsible AI practices, which describe business and ethical considerations when adopting AI, including business and societal value, transparency, risk, fairness, trust, bias mitigation, explainability, accountability, safety, and privacy. Responsible AI operationalizes organizational responsibility and practices that ensure positive and accountable AI development and exploitation.

Figure 2: Gartner Emerging Technologies Trend Impact Radar highlighting AI at the center of maturity (Source: https://blogs.gartner.com/tuong-nguyen/2020/12/07/gartner-launches-emerging-technologies-radar-2021)

Typically, AI governance is practiced by designated teams, but Responsible AI applies to everyone who works with AI. With the increased AI maturity in organizations, defined methods and roles that operationalize AI principles are a must. Analysts advise business leaders to implement Responsible AI, and many large tech companies and governments are already establishing those practices, including:

- Refined processes for handling AI-related business decisions and a defined champion accountable for the responsible development of AI.

- An AI review and validation process in which everyone involved must defend their decisions in front of their peers.

- Oversight committees of independent and respected people within the organization with various backgrounds, not only AI experts.

- Education and training on Responsible AI, especially for the domain experts dipping their toes into AI development with low-code and no-code AI solutions. In fact, analysts from Gartner predict that “By 2023, all personnel hired for AI development and training work will have to demonstrate expertise in responsible AI development.”

- Last but not least, humans should always be in the loop to mitigate AI deficiencies with a well-defined escalation procedure.
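One common way to keep humans in the loop is to accept only high-confidence predictions automatically and escalate everything else to a person. The sketch below illustrates that idea; the `Prediction` type, the `route` function and the 0.9 threshold are hypothetical names and values chosen for this example, not part of any specific product.

```python
from dataclasses import dataclass


@dataclass
class Prediction:
    label: str
    confidence: float  # model's probability for the predicted label, 0.0 to 1.0


def route(prediction, threshold=0.9):
    """Accept confident predictions automatically and escalate the rest to a person."""
    if prediction.confidence >= threshold:
        return "auto"
    return "human_review"


# Hypothetical model outputs, made up for illustration only.
queue = [
    Prediction("approve", 0.97),
    Prediction("reject", 0.62),
    Prediction("approve", 0.90),
]
for prediction in queue:
    print(prediction.label, prediction.confidence, "->", route(prediction))
```

In practice the threshold would be tuned per use case, and high-stakes decisions may warrant human review regardless of the model's confidence.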

In addition to organizational digital ethics and algorithmic trust, regulatory compliance will play an important role in Responsible AI, as governments will likely introduce regulation in the near future. This might lead to something like GDPR, and just as with GDPR, business leaders should be preparing now for future compliance considerations.

Of course, security and privacy will also play a big role in future AI implementations. In a recent Gartner survey, companies that are deploying AI cited security and privacy as their top barriers to implementation. The majority of security attacks against classical software can also threaten AI, so the same risk and security management solutions can be applied to reduce the damage caused by malicious users who compromise AI environments and introduce errors in AI systems. Protecting sensitive data from being stolen or altered, which can introduce bias, also helps protect against Digital Ethics and Responsible AI issues arising from maliciously modified, biased model training.

Figure 3: Recommended security measures for AI by Gartner. (Source: https://www.gartner.com/smarterwithgartner/how-to-make-ai-trustworthy/)

Adopting Responsible AI and security measures is essential to counteract the mistrust in AI solutions and today’s often low confidence in AI’s positive impact. Responsible AI helps organizations to go beyond the pure technical AI progress, to build trust, balance risk and add value to ensure successful business outcomes.

How to Ensure Responsible AI and Digital Ethics

Many large tech companies and faculties are making Responsible and Ethical AI usage a top priority and are establishing AI principles and working groups. For example, Microsoft created these working groups:

- Office of Responsible AI (ORA): sets the rules and defines the governance processes, together with teams across the company.

- AI, Ethics, and Effects in Engineering and Research (Aether) Committee: advises Microsoft’s leadership on the opportunities and challenges presented by innovative AI.

- Responsible AI Strategy in Engineering (RAISE): a team that enables engineering groups across the company to implement Microsoft’s Responsible AI rules.

- Microsoft Fairness, Accountability, Transparency, and Ethics in AI Research team (FATE): a cross-functional, diverse group from fields with a sociotechnical orientation, such as HCI, information science, sociology, anthropology, science and technology studies, media studies, political science, and law, that studies the societal implications of artificial intelligence (AI), machine learning (ML), and natural language processing (NLP). The aim is to facilitate innovative and responsible computational techniques while prioritizing issues of fairness, accountability, transparency, and ethics as they relate to AI, ML, and NLP.

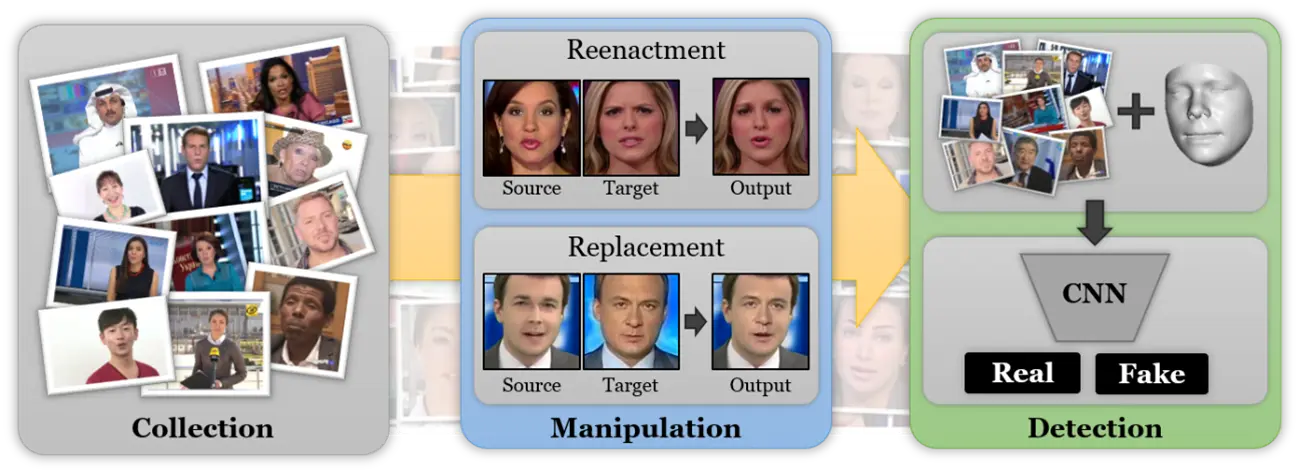

In addition to ethical AI principles and human oversight, there’s also great research on counteracting AI misuse with algorithms. Examples include FaceForensics++ and projects by Microsoft that detect Deep Fakes and similar manipulations.

Figure 4: How FaceForensics++ works to detect Deep Fakes manipulations like facial reenactment or replacement. (Source: https://github.com/ondyari/FaceForensics)

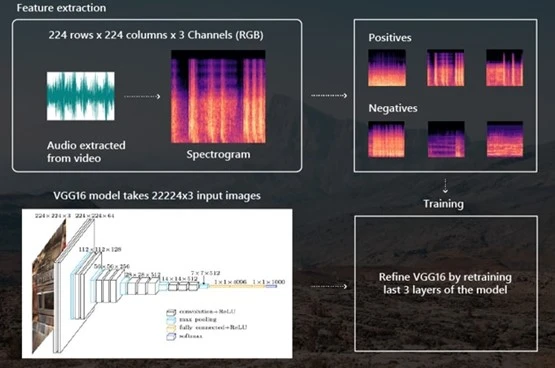

Microsoft also advocates the responsible usage of AI systems as part of the AI for Good initiatives like AI for Health, AI for Earth, AI for Accessibility, AI for Cultural Heritage or even AI for Humanitarian Action. One great example of AI for Humanitarian Action is the usage of AI models to detect illegal weapons like cluster bombs, which are prohibited under international law. This can be done visually with computer vision analyzing archives like Mnemonic, a charity database that preserves video documenting war crimes and human rights violations. Another way is to use acoustic pattern recognition with an AI model that detects distinctive sounds like the popping when a cluster bomb explodes. This will help investigators to more easily identify and document the use of banned weapons from the endless amount of video / audio sources shared on social media and other channels.

Figure 5: The AI model architecture for detecting distinctive acoustics. (Source: https://www.microsoft.com/en-us/ai/ai-for-humanitarian-action-projects?activetab=pivot1:primaryr7)

Microsoft and the AI community are also working on frameworks and tools for AI developers, like Counterfit, which helps organizations assess AI security risks and allows developers to build robust, reliable, and trustworthy algorithms. Fairlearn helps make typical black box AI models more transparent so that bias can be identified and mitigated early. Another open-source tool is InterpretML, which can be used to train interpretable machine learning models in a glass box style and to explain black box models. Interpretability is needed to understand fairness, to create more robust models with better object understanding, to debug, and to enable auditing. There’s even GPU-accelerated model interpretability now with RAPIDS and Interpret-community packages.
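To give a feel for what explaining a black box model can mean, here is a small sketch of permutation feature importance, a generic, model-agnostic technique: shuffle one input feature at a time and measure how much the model's accuracy drops. This is plain Python written for illustration and not the API of InterpretML, Fairlearn or Interpret-community; the `black_box` model and the data are made up for the example.

```python
import random


def accuracy(model, rows, labels):
    """Share of rows for which the model's prediction matches the label."""
    correct = sum(1 for row, label in zip(rows, labels) if model(row) == label)
    return correct / len(rows)


def permutation_importance(model, rows, labels, n_repeats=20, seed=0):
    """Average drop in accuracy when each feature column is shuffled."""
    rng = random.Random(seed)  # fixed seed so repeated runs give the same result
    baseline = accuracy(model, rows, labels)
    importances = []
    for col in range(len(rows[0])):
        drops = []
        for _ in range(n_repeats):
            shuffled_col = [row[col] for row in rows]
            rng.shuffle(shuffled_col)
            # Rebuild each row with only this one feature replaced.
            shuffled_rows = [
                row[:col] + (value,) + row[col + 1:]
                for row, value in zip(rows, shuffled_col)
            ]
            drops.append(baseline - accuracy(model, shuffled_rows, labels))
        importances.append(sum(drops) / n_repeats)
    return importances


# Hypothetical "black box": it only looks at feature 0 and ignores feature 1.
def black_box(row):
    return 1 if row[0] > 0.5 else 0


rows = [
    (0.1, 0.9), (0.2, 0.1), (0.3, 0.8), (0.4, 0.2),
    (0.6, 0.7), (0.7, 0.3), (0.8, 0.6), (0.9, 0.4),
]
labels = [black_box(row) for row in rows]

scores = permutation_importance(black_box, rows, labels)
print(scores)  # one importance score per feature
```

A feature the model ignores gets a score of zero, while a feature it relies on gets a positive score. The same idea can reveal when a model leans heavily on a sensitive attribute or a proxy for one.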

Just like our partner Microsoft, Valorem Reply is committed to responsible development and to leveraging new frameworks and tools as they become available to uphold these commitments. As the #SummerOfAI articles have shown, the demand for intelligent solutions requiring specialized skills and Digital Ethics keeps growing. Responsible AI is essential for every business that wants to successfully adopt AI solutions that are grounded in trust, with the highest levels of privacy and security. Navigating the choices and choosing the best approach and frameworks for your needs is not easy, but we are here to help! If you are ready to build your own AI solutions, need assistance identifying opportunities to grow or improve your organization through AI, or simply want to learn more about how AI is helping others in your industry, Valorem Reply’s Data & AI team can help. Email marketing@valorem.com to schedule time with one of our industry experts.